Perhaps you’ve heard an audio deepfake of a politician made for laughs on social media, such as former President Trump singing ABBA’s “Dancing Queen” or President Biden talking about the Barbie movie. When it comes to deepfake advertising, however, radio stations should be very careful.

This year’s surge in the use of artificial intelligence has changed the technological landscape, and it could soon cause massive legal and financial headaches throughout the upcoming presidential primary and election cycle.

A new wave of AI-driven advertising has already arrived. In one example, a Super PAC’s attack ad against former President Trump used a synthetic clone of his voice to recite a social media post he had written criticizing Iowa’s governor. Similar tactics have been used against other political figures. Last month, the Federal Election Commission (FEC) considered a petition from Public Citizen, a public interest group, calling for regulation of these AI-driven ads. So far, however, the FEC has taken no decisive national action.

Legislation is currently under consideration in both the Senate and the House of Representatives. The proposed bill would require political ads that use artificial intelligence to generate images or video to include a disclaimer disclosing that the content is AI-generated.

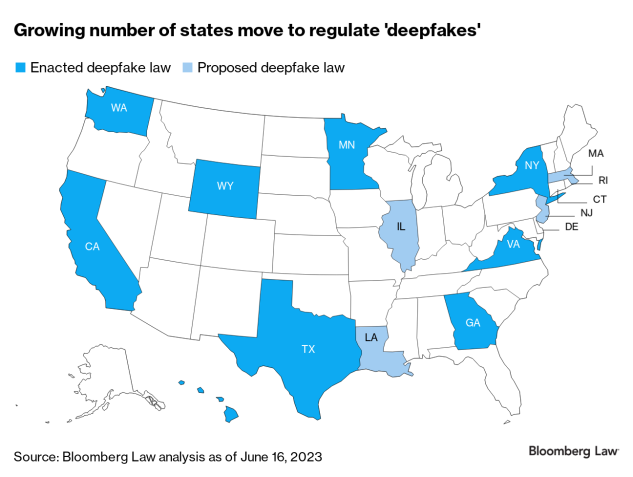

Meanwhile, some states have already put their own measures in place to deal with this emerging technology. Ahead of the 2020 election, Texas enacted penalties for AI-manipulated political ads that lacked a disclaimer and aired within 30 days of an election. California passed a similar law covering the 60 days before an election. Those laws, and the fast-moving nature of AI regulation, were discussed in a recent Bloomberg Law article.

In the Lone Star State, violating that political deepfake law (SB 751) is a Class A misdemeanor, punishable by up to a year in jail and a fine of up to $4,000. California’s law appears to have sunset on January 1 of this year, a further sign of how unsettled this area of law remains.

For radio, these evolving dynamics raise both regulatory questions and potential liability. AI-generated content increases the risk of defamation and misinformation, yet regulatory expectations remain vague and open to interpretation.

In a recent Broadcast Law Blog post, attorney David Oxenford wrote, “Media companies need to assess these regulatory actions and, like so many other state laws regulating political advertising, determine the extent to which the onus is on the media company to assure that advertising using AI-generated content is properly labeled.”

As technology continues to blur the line between reality and artificiality, radio stations may find themselves in a precarious position. It is crucial to exercise due diligence when vetting political advertisements, particularly those that use advanced AI. Staying informed and cautious can prove pivotal in avoiding legal challenges.