(By Tom Webster) Recently, the Pew Research Center published an update on the state of telephone research and noted that they continue to move more and more of their polling to online panels. We at Edison Research have also moved to incorporate more online samples into our survey efforts. In some cases, we continue to do telephone surveys (with mobile phone sample); in others, we pursue a hybrid approach combining telephone and online sources.

A point from the Pew article that I have seen glossed over is this: phone surveys haven’t stopped working, and they haven’t gotten “worse.” In fact, the article makes the opposite point. To date, there is no evidence that declining response rates have led to a decline in the quality of phone research; it’s just getting more expensive to conduct. Despite its challenges, telephone research remains the most accurate form of sampling. And don’t take my word for it (or an uninformed word to the contrary): among professional survey researchers, this remains settled law.

Proper telephone sampling that incorporates mobile phone users remains the best methodology for producing representative, projectable research. The issue isn’t efficacy; the issue is cost, and that issue is certainly relevant to the vast majority of radio stations in America. Pew concludes that telephone research is largely cost-prohibitive, but that is not universally true. For example, during our recent, successful work providing vote count and exit polling data for the National Election Pool, Edison used telephone sampling drawn from both RDD (Random Digit Dialing) and RBS (Registration-Based Sampling) frames, and it was reassuringly and predictably accurate, a fact that Pew also noted.

We also continue to employ telephone sampling in our industry-standard Infinite Dial research series. Thanks to our partnership with Triton Digital, we have been able to maintain the rigorous standards for which the Infinite Dial is known.

In short, phone surveys still work, and online sampling continues to get better as we develop more sophisticated ways to weight and frame that data. My colleagues at Edison have, in fact, recently presented a paper to the American Association for Public Opinion Research (AAPOR) on the novel way we have developed to weight online panel data that brings it more in line with how we would expect a representative online sample to behave. Telephone and online sampling are both effective in the appropriate circumstances, and both are important tools in our quest for the truth.
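As background on what “weighting” means here: one common, generic technique is cell-based post-stratification, in which each respondent’s weight is their demographic cell’s share of the target population divided by that cell’s share of the sample. The sketch below illustrates only that generic idea; it is not the novel method described in the AAPOR paper, and the age cells and population shares are hypothetical.

```python
from collections import Counter


def post_stratification_weights(sample_cells, population_shares):
    """Return one weight per respondent so that weighted cell shares match
    the target population shares.

    sample_cells: list of demographic cell labels, one per respondent.
    population_shares: dict mapping cell label -> share of target population.
    """
    n = len(sample_cells)
    counts = Counter(sample_cells)
    # Weight for a cell = (population share) / (share of the sample in that cell).
    cell_weight = {
        cell: population_shares[cell] / (count / n)
        for cell, count in counts.items()
    }
    return [cell_weight[cell] for cell in sample_cells]


# Hypothetical example: an online sample that over-represents ages 35-54.
sample = ["18-34"] * 20 + ["35-54"] * 60 + ["55+"] * 20
population = {"18-34": 0.35, "35-54": 0.35, "55+": 0.30}  # hypothetical targets
weights = post_stratification_weights(sample, population)
```

Note the limit this exposes: post-stratification can only reweight people who are in the sample. A group with zero chance of being sampled contributes no respondents, so no weight can restore it.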

But there’s a type of sampling we see out there that we would never rely on as the sole source of decision support for radio stations: surveying respondents from those stations’ own email databases. Data obtained from self-selected responses from a radio station’s email database is not projectable to the population, and it isn’t even projectable to the listeners of that station. Or to anyone at all, actually.

Here’s why. First of all, any email database survey involves a built-in trade-off: the responses are completely self-selected. It’s one thing to critique telephone research for its 6-7% response rate, but have you looked at the response rates from your email database lately? I can assure you that a 7% response rate to a survey of your email database would be cause for celebration. Instead, email database response rates are very likely below 1%. What’s more crowded: your voicemail box or your email inbox? I rest my case.

But that’s not the most dangerous pitfall. The real issue is that your email database reflects a very small percentage of the total universe out there, and a potentially slanted one at that. Consider what percentage of your database signed up to enter a contest, for example. And consider what percentage of your total audience actually plays these contests. You see the problem?

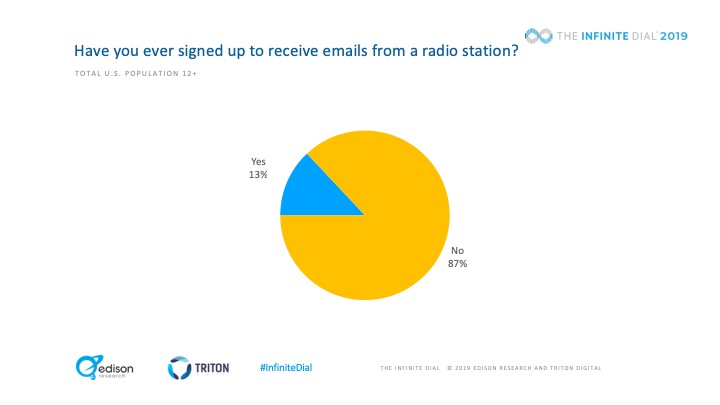

In our 2019 Infinite Dial survey, we conducted a 4,000-person supplemental online survey from our high-quality national panel, and we weighted that data to our nationally representative Infinite Dial demographics. In this additional online survey, we asked online Americans if they had ever signed up to be part of a radio station database. Here’s what we came up with:

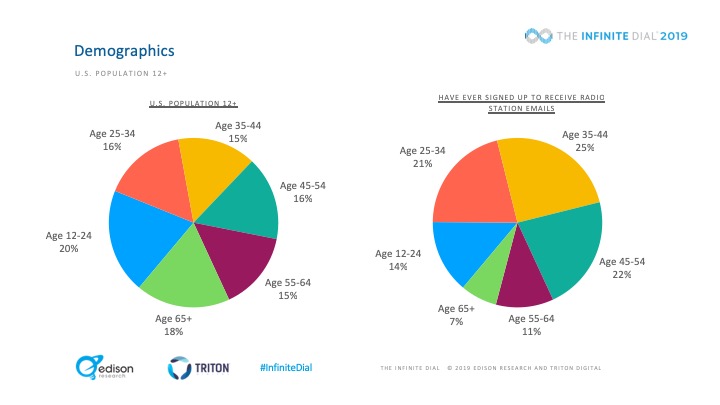

That’s right: 13% say they have at some point signed up to be part of a radio station email list. In fact, given that an online panel is already composed of “joiners” (people who agree to be part of that panel), it is highly likely that 13% is a significant overstatement. What’s more, respondents who had signed up for a radio database were heavily clustered in the 35-54 demographic, which is increasingly commercial radio’s self-fulfilling prophecy. Respondents to a database survey are not only a tiny, distorted percentage of the database as a whole; the database itself also excludes at least 87% of your online audience and, of course, 100% of your offline audience. Nielsen, by contrast, doesn’t exclude them: it goes to great pains, using address-based sampling and other measures, to ensure that all of those “invisible” people actually receive diaries and meters.
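To put those two figures together: if at most 13% of online Americans have ever joined any radio station’s email list (so a single station’s share is smaller still), and fewer than 1% of list members respond to a given survey, the share of the online audience that can possibly appear in a database survey is tiny. A back-of-the-envelope sketch, treating both figures as upper bounds and ignoring the offline audience entirely; the function name is illustrative only:

```python
def max_reachable_share(signup_rate, response_rate):
    """Upper bound on the share of the online audience that can appear as
    respondents in a station email-database survey: the share who ever
    joined a list times the share of list members who answer."""
    return signup_rate * response_rate


# 13% ever signed up (likely overstated); response rate assumed below 1%.
share = max_reachable_share(0.13, 0.01)
print(f"At most {share:.2%} of online listeners, about 1 in {round(1 / share)}")
```

Because both inputs are upper bounds, the true figure is lower, and the remaining 99.8%+ of online listeners are exactly the people whose opinions such a survey cannot observe.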


The truly dangerous part about this is what former Secretary of Defense Donald Rumsfeld would call the “unknown unknowns.” Because the vast majority of your potential audience is excluded from any possibility of being sampled, you cannot know how they would respond, so you cannot even begin to estimate the bias of the sample you are able to collect. The non-response bias of a radio station database survey renders that data essentially meaningless, because you don’t know how to frame it. You can’t say these respondents are “your best customers,” “your most engaged audience,” or your most “tech-savvy” listeners. You can’t say any of that. You just don’t know.

Proponents of this kind of research will argue that it’s better than nothing, or that it’s “directionally accurate.” It is no such thing. Believing that it is directionally accurate, absent any information to characterize the non-response bias of that data, rests on a cognitive bias. It isn’t better than nothing; it’s worse than nothing if it leads you to make decisions that please an ever-smaller part of your database while turning off the 90%+ who weren’t even asked, along with the vast, silent majority of your database that didn’t bother responding.

Now, are there research uses for a station’s email database? Of course. We’ve certainly surveyed the member databases of public media stations, for example, but those members joined for very different reasons (they donate to the station rather than just registering for the Birthday Game), they have higher response and engagement rates, and, importantly, we always pair those surveys with a concurrent market study to calibrate the data. Similarly, we have surveyed customer and user databases for numerous non-radio companies. But a licensed user of software is not the same thing as a contest entrant who might not even listen to the radio, let alone your station.

There is no question that conducting research for local radio stations has become more challenging over the past few years. Challenging, but not impossible: Edison and some of our peers in the research space continue to work with clients to find workable solutions. But it’s time for the radio industry to stop deluding itself about the quality of these database polls. Cost is the only reason to do them, not any ineffectiveness of other forms of research. And while those costs may be small, radio station databases are not “good enough.” We must be vigilant against the argument that, since all research is flawed, you might as well go with the cheapest or easiest solution. All humans are flawed, too, but that doesn’t mean we are all equally good.

Continuing to make decisions, or even to characterize the radio industry, on the basis of this kind of research will lead the industry further into what I call the “optimization trap”: we keep making a smaller and smaller body of listeners happier and happier, until the only people we are satisfying are callers 1-10.

Tom Webster is a Senior Vice President of Edison Research, a custom market research company best known as the sole provider of exit polling data during US elections for all the major news networks.

GREAT thoughts, Tom! Especially your last comment about super-serving “Callers 1-10.” One of the greatest myths in radio is, “If we delight our P1 core listeners, the rest will surely follow.” Yeah, well, sometimes…

Thanks for the thoughtful comment, Bob. I am of course referring to strategic/market research or any attempt to characterize your audience or market as a whole. I do think there is an open question about your point, though:

Are we sure that people who sign up to receive promotions from a radio station are active listeners? Does digital activity correlate to listening activity? What does “active” really mean? And how do we know?

And don’t we want passive listeners who lie on the couch for 10 hours with a PPM clipped to their Snuggies? 🙂

Thanks again for commenting, Bob.

Excellent point, Tom. Of course, one would always need to re-screen anyone prior to an online survey, regardless of where the data originates. Just using them blindly because they belong to the station database would be a serious flaw, indeed. Thanks much for that clarification and caveat.

As someone who has also spent half my career in broadcast research, I must take issue with one point, Tom. There are times when you do want to look at your most active listeners. If 80% of ratings come from the smallest 20% of your cume, which we call the core, then it makes very good sense to examine those highly active listeners. They not only listen more often but can also give you faster feedback on new music, the most dangerous product any station plays. When conducting online callout using station databases, we saw very accurate results that not only correlated with traditional telephone callout but were also significantly more stable from week to week. After all, who can give faster, more “active,” and more accurate results than the most active listeners? In fact, we always recommended that clients understand just how active the results were and not overreact to “burn” data, which also appears sooner. But honestly, when dealing with current music, I want to know the hits and stiffs faster than phone research can relay them. Active station-database data is an extremely cost-efficient, accurate way of doing so. I also found station databases extremely useful for recruiting small focus groups designed to home in on station specifics.

Ken Benson’s P1 Media/Research uses station databases and various “crowd research” methods, and when you examine their results and see the clear success of his radio clients, it is hard to deny. Now, if you want to use station databases for strategic market and perceptual studies, I strongly agree with you and would discourage that for all the reasons you mentioned.

Bob Lawrence